Summary

As part of the RE:privacy project, I am reverse engineering and hacking reproductive health apps to interrogate the security and privacy of these products. You can find out more about this project in this post.

Glow & Eve are products of another highly controversial company that, according to the Mozilla Foundation, has many questionable data privacy practices. The company has been involved in a lawsuit in which it allegedly failed to "adequately safeguard health information," "allowed access to user's information without the user's consent," and had security problems that "could have allowed third parties to reset user account passwords and access information in those accounts without user consent." This case stemmed from vulnerability research carried out by ConsumerReports.org. A brief overview of their findings can be found in this video:

As you can see from this finding, in addition to the account takeover issues and reproductive health data leaks, personal data was exposed through a vulnerability in Glow's forum. The company then fixed this security flaw. The State of California subsequently took legal action against the company, which agreed to pay $250,000 in a settlement over this negligence. The California Attorney General said of the case:

“When you meet with your doctor or healthcare provider in person, you know that your sensitive information is protected. It should be no different when you use healthcare apps over the internet. Mobile apps, like Glow, that make it their business to collect sensitive medical information know they must ensure your privacy and security. Excuses are not an option. A digital disclosure of your private medical records is instantaneously and eternally available to the world. Today’s settlement is a wake up call not just for Glow, Inc., but for every app maker that handles sensitive private data.”

The Attorney General's complaint alleges the Glow app:

- Failed to adequately safeguard health information;

- Allowed access to user’s information without the user’s consent; and

- Additional security problems with the app's password change function could have allowed third parties to reset user account passwords and access information in those accounts without user consent.

One of the bugs that Consumer Reports found led to a leak of personal data from the forum: the data returned was excessive and created privacy issues. They stated:

'we saw dozens of lines of text, which included the post writer’s full name, her email address, her rough location, and a number of details from her health log. We saw her birthdate, expressed as something called a UNIX timestamp, which is easy to convert into a regular date using free online calculators. We also saw her true user ID, which was a long string of numbers. (Every Glow user name gets linked to such a number, which she never normally sees.)... That information could have been enough to open a woman to harassment or identity theft'
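The "free online calculators" point undersells how trivial this conversion is. As a rough illustration, Python's standard library does it in one call. The timestamp below is a made-up value, not data from Glow or Consumer Reports, and the sketch assumes the field holds seconds since the epoch in UTC.

```python
from datetime import datetime, timezone

def birthdate_from_unix(ts: int) -> str:
    """Convert a UNIX timestamp (seconds since 1970-01-01 UTC) to an ISO-8601 date string."""
    return datetime.fromtimestamp(ts, tz=timezone.utc).date().isoformat()

# Hypothetical timestamp, not taken from any real user record.
print(birthdate_from_unix(631152000))  # 1990-01-01
```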

In this research, I found an additional vulnerability that led to a personal data leak from Glow's forum. Whilst this bug is not the same as the one in Consumer Reports' earlier finding, the data it returned was another excessive leak of forum users' personal information. Similar to the Consumer Reports bug, this data included: first name, last name, age group, location, user ID and any images uploaded to the user profile.

Not only does this present a risk of harassment or identity theft, as described by Consumer Reports, but I believe it may also be a breach of GDPR (explained in later sections). Either way, if I were using this product and this data about me were available to anybody, I wouldn't be happy about it.

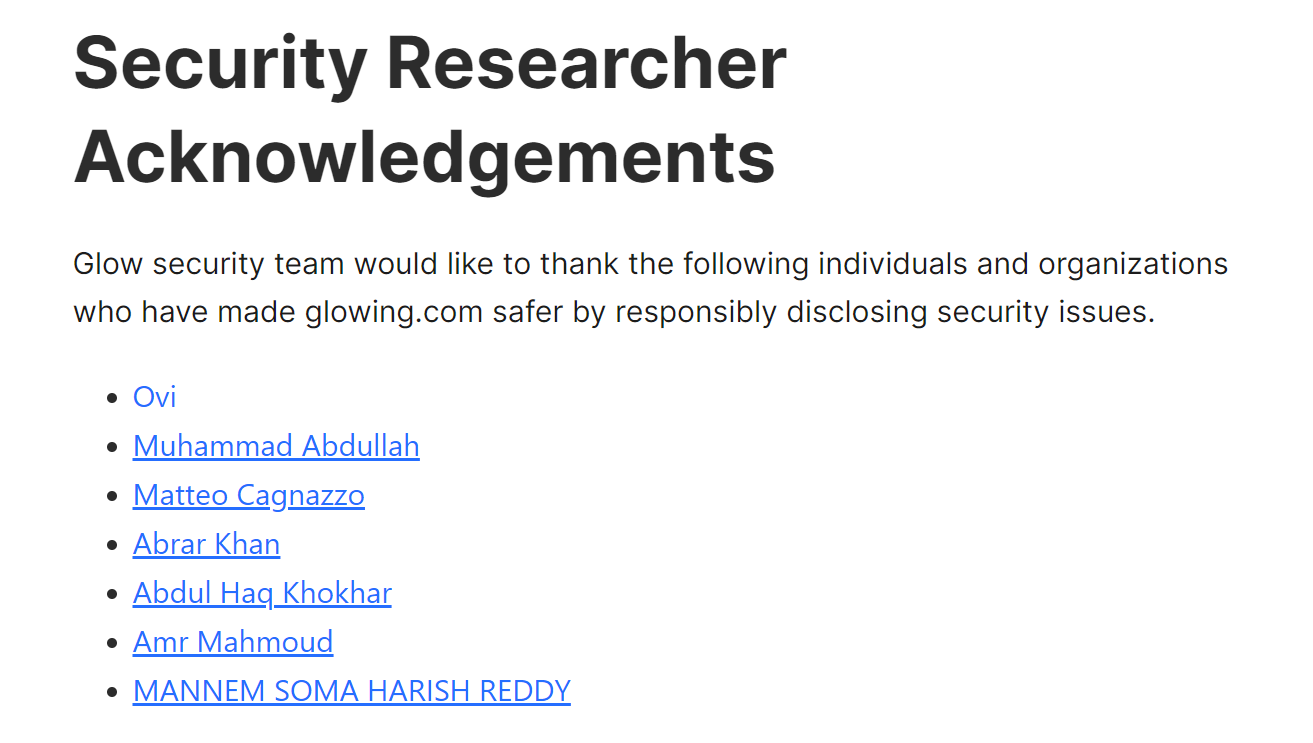

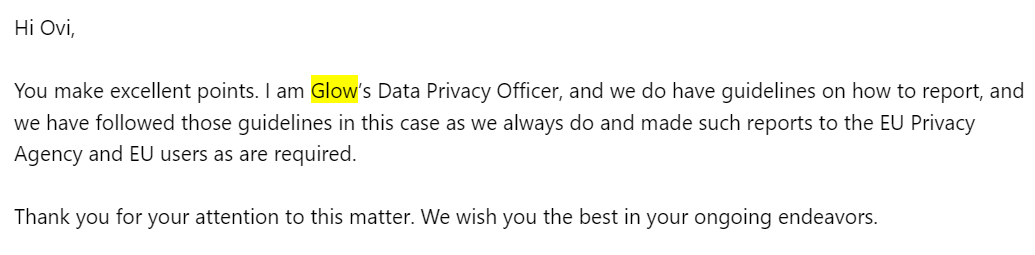

I reported the vulnerability to the company along with my recommendations on how to fix it. Throughout my discussions with them, they gave mixed signals on whether they thought it was an issue at all. They initially denied the vulnerability, while at the same time implementing the fix I suggested. When I told them I believed the vulnerability to be a GDPR breach, they somewhat agreed and vaguely informed me that they had notified EU users and the EU privacy agency (the wording is wishy-washy, but I'll leave that for you to decide). I have multiple EU-based accounts across the software and did not receive any such notification. After Glow implemented the fix, they acknowledged me on the security vulnerability page of their website and informed me they couldn't offer a bug bounty because they didn't have the money for it.

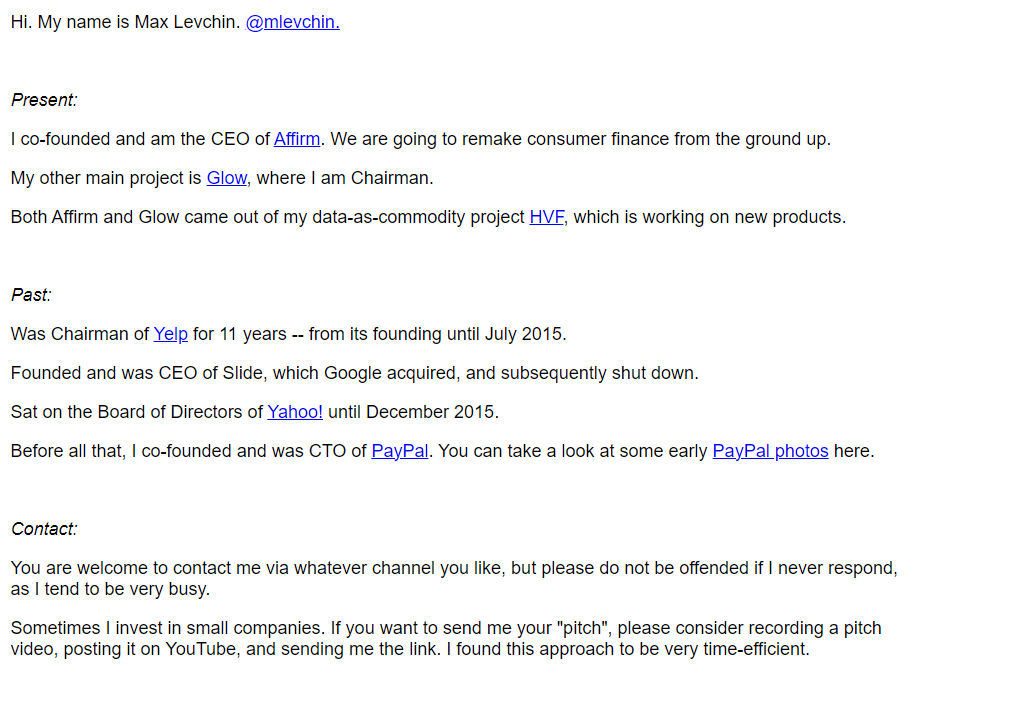

The company is in its second phase of funding and appears to have sizable investment. It was spawned out of a VC called HVF (Hard Valuable Fun); one of its chairmen is Max Levchin, a co-founder of PayPal, who openly described Glow as a "data-as-commodity product". That nicely summarizes how this product aims to operate, and the ethos of Big Tech.

Beyond this report and other security bugs that create privacy issues in products such as this, it's important to recognize the greater issues at stake. Glow, and companies like it, are fundamentally selling personal data for profit. When I sent a draft of this report to Aral Balkan, whose work and philosophy I'm a big fan of, Aral made a point about the greatest vulnerability at stake here, one that precedes anything in this report or others like it:

“We’re very concerned with vulnerabilities in apps that process personal data but we usually miss the most important one: how they are funded and what that means for the privacy of that data. Regardless of breaches, etc., Glow is a venture-capital funded startup. That means that it will have to be sold (exit) in order to be successful. So the real worry on everyone’s mind should be, who will buy it and all its data? Elon Musk? Moms for Liberty? Someone worse? The real vulnerability is that Glow, the company, and all its data, are available for sale to anyone who has the money to afford to buy it. And that’s a vulnerability that exists in every venture capital-funded startup. In fact, it’s the backbone of Silicon Valley and Big Tech.” - Aral Balkan

I strongly agree with Aral's perspective on this matter. Products like this inherently pose significant threats to society and our civil liberties, even before considering the security of the product itself. As Aral suggests, this is how Big Tech works: the privacy of its users is already compromised by the data ownership model and its data-as-commodity nature. (Aral is doing amazing things, so please go check out his work here.)

The bug

On October 18th 2023, I reported an insecure direct object reference (IDOR) vulnerability to the company. It leaked data that, combined, I believe makes up personally identifiable information, and it applied to all of their 25 million users. The company accepted my disclosure and patched the issue, but when I asked about a bounty reward I was told "I have to admit that as a startup we cannot afford that". Make of that what you will, but considering they have over 25 million users and multiple rounds of investment, it seems pretty mad to me to claim to be a poor startup that can't pay researchers for finding security issues in their product.

The company questioned whether the data returned by the vulnerability counted as personally identifiable information. So, before we get into the vulnerability disclosure, I want to set out my rationale for why this is personally identifiable data, and what reasonable action should have been taken to let users know about the issue. The vulnerability exposed the following information about every user on the platform:

- First name

- Last name

- Age group (user defined, one of 13-18, 19-25 or 26+)

- Location (user defined; as specific or as general as whatever the user enters)

- UserID (within the platform)

- Any images uploaded by the user (profile photos)

E.g. Jane Bloggs, age group 13-18, Primrose Hill, Brooklyn, New York, [user ID]

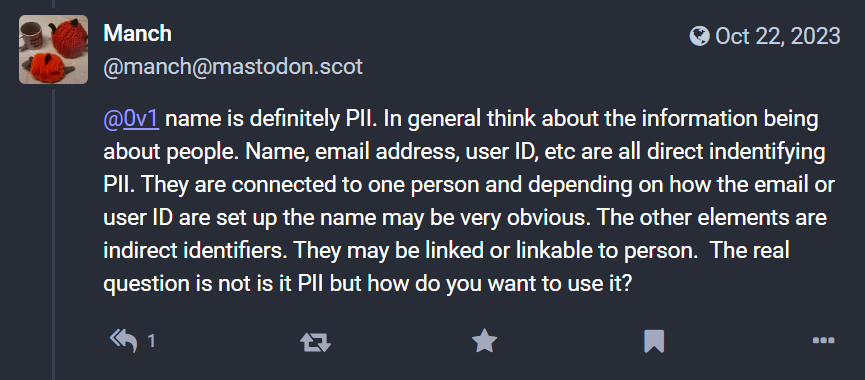

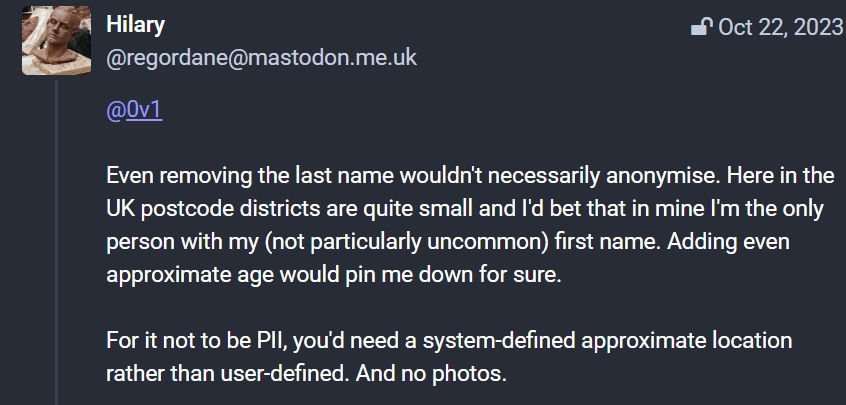

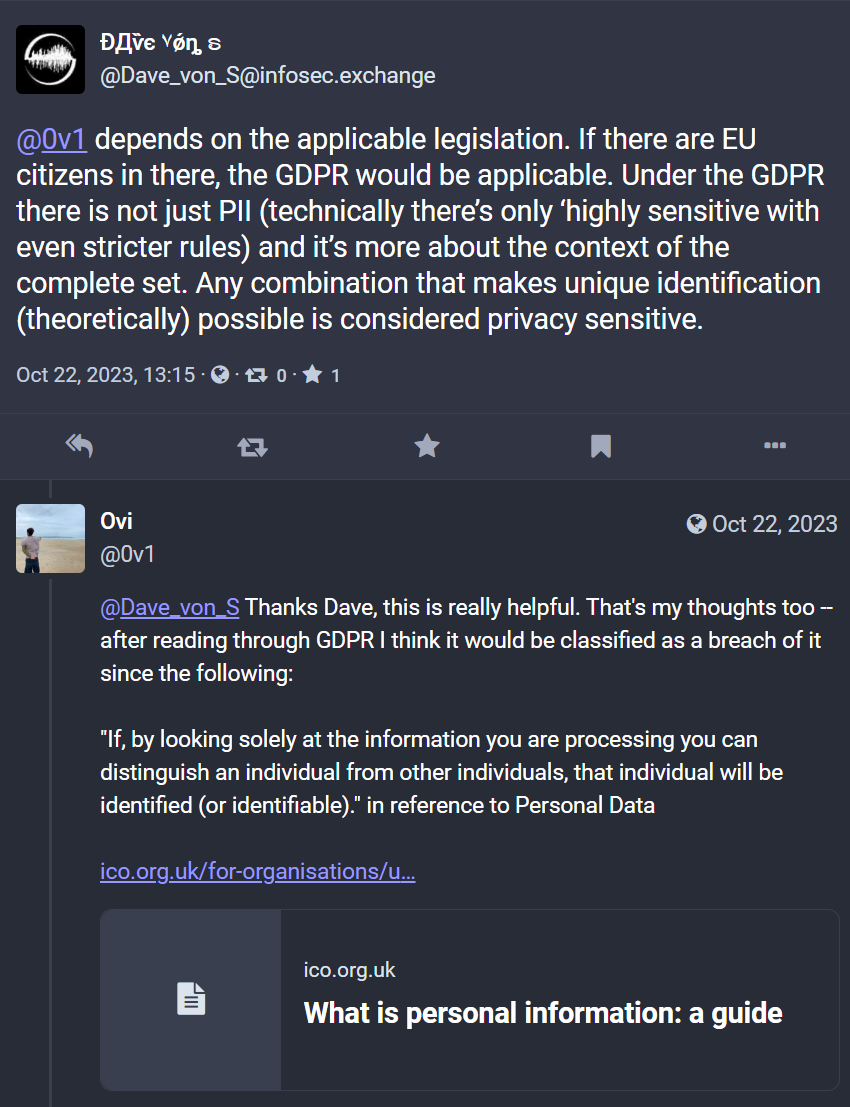

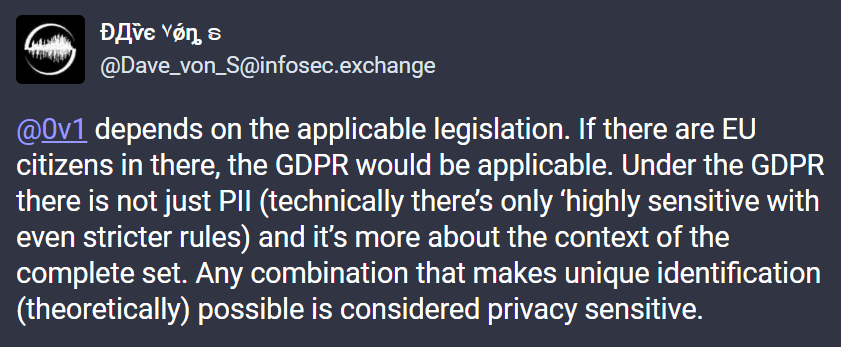

After discussing with friends who work in privacy and folks on my Mastodon channel whether this data would be considered personally identifiable information under GDPR, I reviewed the GDPR guidelines. The following reference points can be found in this piece of GDPR guidance:

>Personal data only includes information relating to natural persons who: can be identified or who are identifiable, directly from the information in question; or who can be indirectly identified from that information in combination with other information.

> If, by looking solely at the information you are processing you can distinguish an individual from other individuals, that individual will be identified (or identifiable). You don’t have to know someone’s name for them to be directly identifiable, a combination of other identifiers may be sufficient to identify the individual. If an individual is directly identifiable from the information, this may constitute personal data.

To be clear, I'm using "identifiable information" in the specific sense GDPR uses it. Whilst I agree this is not 'highly' sensitive data, under EU legislation it counts as identifiable data via linkage. GDPR calls this "personal data", and a lack of control over "personal data" that can be used to identify someone is a breach of this legislation.

In my understanding, and after consulting with other researchers, this type of data leak is a breach of GDPR within the EU, which the application is expected to comply with since it is available there.

>Even if you may need additional information to be able to identify someone, they may still be identifiable.
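To make the linkage argument concrete, here is a small sketch of the idea behind k-anonymity: count how many records share each combination of exposed fields. A combination held by only one record singles that person out. The records are invented for illustration and the field names are hypothetical; real uniqueness rates would depend on Glow's actual data.

```python
from collections import Counter

# Invented records shaped like the leaked forum fields (illustrative only).
records = [
    {"first": "Jane", "last": "Bloggs", "age_group": "13-18", "location": "Brooklyn"},
    {"first": "Jane", "last": "Smith", "age_group": "26+", "location": "Brooklyn"},
    {"first": "Amy", "last": "Bloggs", "age_group": "13-18", "location": "Leeds"},
    {"first": "Jane", "last": "Bloggs", "age_group": "26+", "location": "Leeds"},
]

def group_sizes(rows, fields):
    """Count how many rows share each combination of the given fields."""
    return Counter(tuple(row[f] for f in fields) for row in rows)

def unique_fraction(rows, fields):
    """Fraction of rows whose field combination no other row shares (k = 1)."""
    sizes = group_sizes(rows, fields)
    unique = sum(1 for row in rows if sizes[tuple(row[f] for f in fields)] == 1)
    return unique / len(rows)

print(unique_fraction(records, ["first"]))  # 0.25
print(unique_fraction(records, ["first", "last"]))  # 0.5
print(unique_fraction(records, ["first", "last", "age_group", "location"]))  # 1.0
```

Each extra field splits the groups further; with all four fields, every invented record is unique.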

It is also my understanding from these texts that failing to properly handle personal data is a breach of GDPR. Under GDPR, my understanding is that an organization should do the following:

- They must have a Data Protection Officer (DPO)

- They must have guidelines on how to report

- They must respond to the report

- They must report the breach to an EU privacy agency (a data protection supervisory authority) within 72 hours of becoming aware of it

- They must inform (possibly) impacted (EU) users
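On the 72-hour point, GDPR Article 33 counts the window from when the controller becomes aware of the breach (it applies "where feasible", and not at all if the breach is unlikely to result in a risk to people). A minimal sketch of the arithmetic, under the assumption that awareness began at the date of my report:

```python
from datetime import datetime, timedelta, timezone

# GDPR Art. 33: notify the supervisory authority within 72 hours of becoming aware.
NOTIFICATION_WINDOW = timedelta(hours=72)

def notification_deadline(aware_at: datetime) -> datetime:
    """Latest time to notify the supervisory authority, given when the breach became known."""
    return aware_at + NOTIFICATION_WINDOW

# Assumption: treat the report date (18 October 2023, midnight UTC) as the moment of awareness.
aware = datetime(2023, 10, 18, tzinfo=timezone.utc)
print(notification_deadline(aware).isoformat())  # 2023-10-21T00:00:00+00:00
```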

After discussing the vulnerability with the company, **and them patching it**, they have continued to deny that being able to leak the first name, last name, age range, location (user defined) and user ID of all 25+ million users is a breach of GDPR, or indeed a privacy concern at all. This is despite their CTO initially affirming that the points I raised about data privacy and GDPR were 'excellent' and telling me they had notified the EU privacy agency and all EU users, which, as far as I'm aware, they haven't.

As described above, I believe this data is enough to be personally identifiable. Let me know in the comments or on Mastodon what you think, and whether you consider this a privacy risk.

Here is some feedback from friends on my Mastodon channel with their views on this:

After explaining the points raised by my research and consulting with friends, like those above, I raised these issues with the CTO, suggesting that Glow was in breach of GDPR and must notify the EU privacy agency and all EU users.

I received communication from the CTO (I haven't included much of the email to avoid copyright infringement):

To me, this gives the impression that they have indeed reported the leak to the EU privacy agency and EU users. Despite this, I can find no reports of it, and I have seen **absolutely zero notification or information to EU users (which includes me) about the leak**.

With that out of the way, let's move on to the vulnerability itself, which is trivial. It was reported on the 18th of October 2023 and, in accordance with responsible disclosure practice, I have waited until after the 18th of January 2024 (3 months) to release this information. The issue has now been patched, although Glow have refused to accept that the leak contains identifiable information or is a privacy concern to its users. That said, the company have admitted that the information should not have been available via the API, and they patched it about a week after my report.

IDOR vulnerability in Glow API

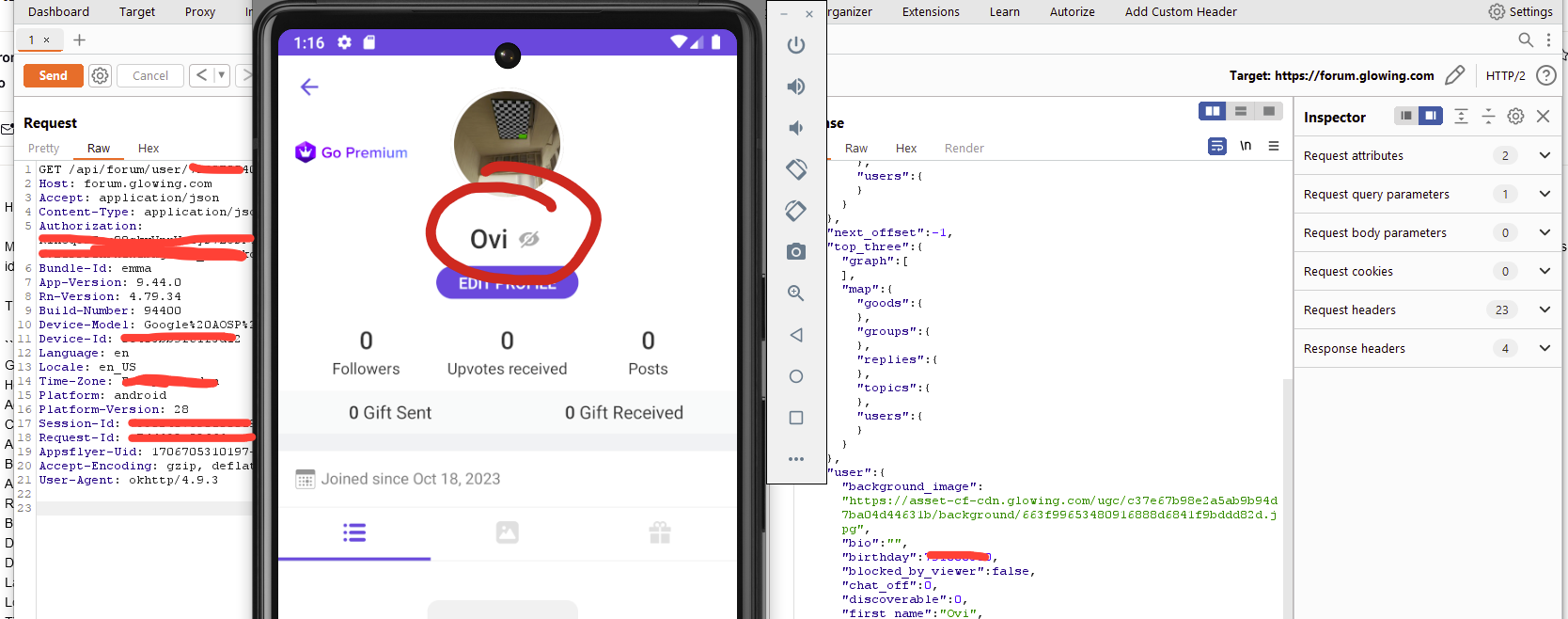

After scanning the application's API endpoints, I found the host "forum.glowing.com".

On that host, the following URI contains a user ID:

/api/forum/user/72057594059859526/

This API endpoint is vulnerable to insecure direct object reference (IDOR): it allows unrestricted, non-rate-limited requests for any user ID. As an example, the following request can be made with the user ID 72057594059859526:

    GET /api/forum/user/72057594059859526/new_feeds_v2?offset=0 HTTP/2
    Host: forum.glowing.com
    Accept: application/json
    Content-Type: application/json
    Authorization: REDACTED
    Bundle-Id: emma
    App-Version: 9.36.1
    Rn-Version: 4.75.3
    Build-Number: 93601
    Device-Model: Google%20Android%20SDK%20built%20for%20x86
    Device-Id: REDACTED
    Language: en
    Locale: en_US
    Time-Zone: Europe/London
    Platform: android
    Platform-Version: 26
    Session-Id: REDACTED
    Request-Id: REDACTED
    Appsflyer-Uid: REDACTED
    Accept-Encoding: gzip, deflate
    User-Agent: okhttp/4.9.3

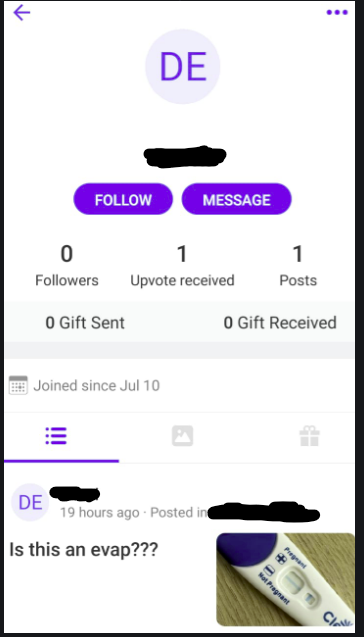

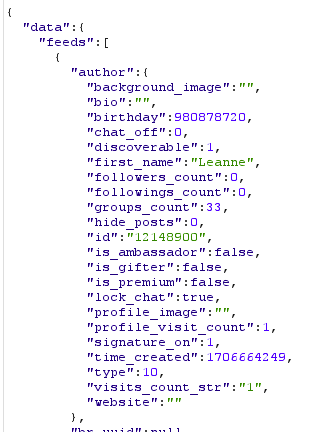

This user-supplied input lets me retrieve objects belonging to other users. The IDs are entirely enumerable, and the API does not rate-limit requests. By changing the user ID in the request, I am able to see data relating to other users. What's more, the API returns more data than the application displays, which is what causes the personal information leak. This means an attacker could get a full list of names, IDs, age ranges and user-defined locations for the entire Glow user database. Glow claims to have 25 million users, all of whom are automatically added to the forum database when they register (more on that later). As described in the previous section, the data returned contains:

- First name

- Last name

- Age range (user defined, one of 13-18, 19-25 or 26+)

- Location (user defined; as specific or as general as whatever the user enters)

- UserID (within the platform)

- Any images uploaded by the user (profile photos)

Here's an example of what is shown to the user in the app. None of the additional data returned by the API is actually visible to a regular user: the only fields the application uses from these objects are first name, followers, upvotes, posts, images and gifts. Such data is not a privacy concern.

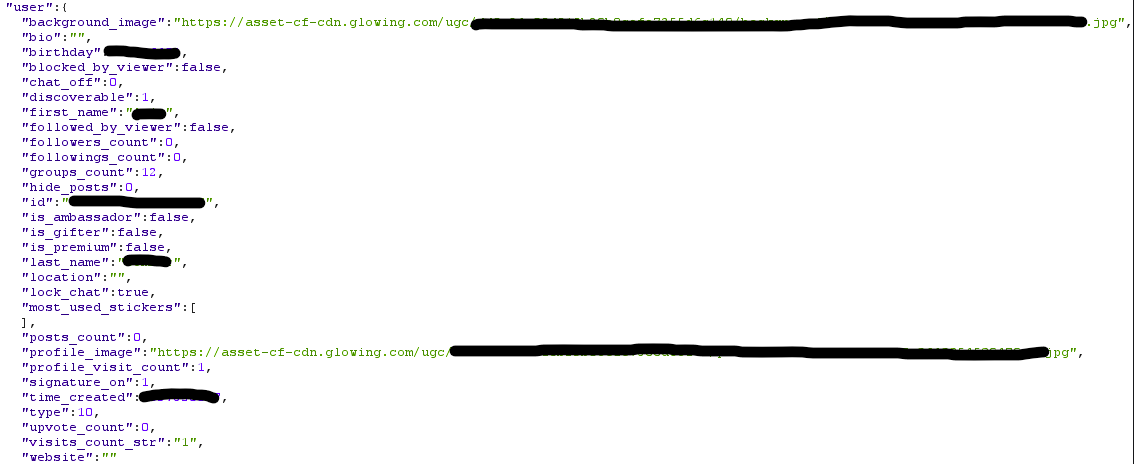
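A common way to avoid this kind of over-exposure is to build API responses from an explicit allowlist of the fields the client actually renders, rather than serializing the whole user record. The sketch below uses the fields the app is described as using; the key names (`first_name`, `last_name`, `location` and so on) are hypothetical, not Glow's real schema.

```python
# Hypothetical field names; Glow's actual API schema is not known here.
PUBLIC_PROFILE_FIELDS = ("first_name", "followers", "upvotes", "posts", "images", "gifts")

def to_public_profile(user_record: dict) -> dict:
    """Return only allowlisted fields, so sensitive columns are never sent by default."""
    return {k: user_record[k] for k in PUBLIC_PROFILE_FIELDS if k in user_record}

record = {
    "user_id": 72057594059859526,
    "first_name": "Jane",
    "last_name": "Bloggs",
    "age_group": "13-18",
    "location": "Brooklyn, New York",
    "followers": 12,
    "posts": 3,
}
print(to_public_profile(record))  # {'first_name': 'Jane', 'followers': 12, 'posts': 3}
```

An allowlist fails closed: a column added to the user record later stays private until someone deliberately exposes it.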

However, when the API request shown above is made, information like the following is returned (you will notice some similarities with the data in the Consumer Reports bug):

This API was not rate-limited, which meant the entire user base could be pulled without restriction. It's worth noting that this is a publicly accessible API endpoint, despite Glow claiming that it's only for "developers". There is no unauthorized access here: the auth token the application gave me has access to this API endpoint, so the data is entirely authorized to me.
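Rate limiting would not have fixed the over-exposure, but it would have made pulling the whole user base slow and noisy. Below is a minimal per-token token-bucket sketch; the limits (a burst of 5, then 1 request per second) are arbitrary assumptions, and a real deployment would keep this state in a shared store rather than in process memory.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, Optional

@dataclass
class TokenBucket:
    capacity: float        # maximum burst size
    refill_per_sec: float  # tokens restored per second
    tokens: float = field(init=False)
    updated: Optional[float] = field(default=None, init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity  # start full

    def allow(self, now: Optional[float] = None) -> bool:
        """Spend one token if available; False means the API should answer HTTP 429."""
        now = time.monotonic() if now is None else now
        if self.updated is not None:
            elapsed = max(0.0, now - self.updated)
            self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

buckets: Dict[str, TokenBucket] = {}

def allow_request(auth_token: str, now: Optional[float] = None) -> bool:
    # Assumed limits: bursts of 5, then 1 request per second per auth token.
    if auth_token not in buckets:
        buckets[auth_token] = TokenBucket(capacity=5, refill_per_sec=1.0)
    return buckets[auth_token].allow(now)

# Five rapid requests pass, the sixth is throttled, and one more is allowed a second later.
print([allow_request("token-a", now=0.0) for _ in range(6)])  # [True, True, True, True, True, False]
print(allow_request("token-a", now=1.0))  # True
```

A per-token limit alone won't stop someone spreading requests across many accounts, so it complements, rather than replaces, returning less data.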

What's further concerning about this API is that even if a user 'hides' themselves from the forum, the API will still return data for that user. As I discussed earlier, whenever someone signs up to the app, they are automatically enrolled in the forum and must manually opt out. But opting out doesn't protect a user from this vulnerability. In this screenshot, you can see one of my profiles where I changed the privacy settings specifically to hide my profile from the forum. Despite this, the API still returned data on my profile. This, for me, is an additional serious privacy violation. If a user has specifically opted out of being included in a dataset, that choice should be honored consistently, both in what the application shows publicly and in what the API returns.
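What this calls for is a single visibility check that every code path, in-app views and raw API calls alike, goes through, so an opt-out can't be bypassed by calling the API directly. A sketch under assumptions: the `hidden_from_forum` flag, the `ForumProfile` type and the in-memory store are hypothetical stand-ins, not Glow's code.

```python
from dataclasses import dataclass
from typing import Dict, Optional

@dataclass
class ForumProfile:
    user_id: int
    first_name: str
    hidden_from_forum: bool  # hypothetical name for the privacy-settings opt-out

class ProfileNotFound(Exception):
    """Raised for both missing and hidden profiles, so the API doesn't reveal which is which."""

def can_view(requester_id: int, profile: ForumProfile) -> bool:
    # Owners can always see themselves; everyone else only sees profiles that are not hidden.
    return requester_id == profile.user_id or not profile.hidden_from_forum

def get_profile(store: Dict[int, ForumProfile], requester_id: int, target_id: int) -> ForumProfile:
    """Object-level authorization: look up by ID, then check the requester may see it."""
    profile: Optional[ForumProfile] = store.get(target_id)
    if profile is None or not can_view(requester_id, profile):
        raise ProfileNotFound(target_id)
    return profile

store = {
    1: ForumProfile(1, "Jane", hidden_from_forum=True),
    2: ForumProfile(2, "Amy", hidden_from_forum=False),
}
print(get_profile(store, requester_id=2, target_id=2).first_name)  # Amy
print(get_profile(store, requester_id=1, target_id=1).first_name)  # Jane (owner sees own hidden profile)
try:
    get_profile(store, requester_id=2, target_id=1)
except ProfileNotFound:
    print("hidden profile not returned")
```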

A note about responsible disclosure, and to any claims about this report: I acted with no malicious intent with this data; however, someone could easily have pulled the entire 25 million+ user database. I reported the vulnerability to their vulnerability disclosure channel immediately upon finding it on October 18th 2023, and in the week of the 28th of October, the data available within the API was patched. In accordance with industry standards, I have waited 90 days before publicly disclosing this vulnerability, and I notified Glow of this disclosure timeline in my initial report. The vulnerability was found in a publicly available API that was accessible with my own user accounts' authorization keys.
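For reference, the timeline arithmetic: a 90-day window from the 18 October 2023 report runs to 16 January 2024, so publishing after 18 January 2024 sits just past it. A quick check with Python's `datetime` module, using only the dates given in this write-up:

```python
from datetime import date, timedelta

reported = date(2023, 10, 18)  # initial report date, per this write-up
embargo_end = reported + timedelta(days=90)

print(embargo_end)  # 2024-01-16
print((date(2024, 1, 18) - reported).days)  # 92
```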

Follow-up

After reporting the vulnerability, the organization denied that the data obtained was personally identifiable and said they did not consider it a privacy issue. Please let me know in the comments what you think about this. Despite denying these claims, they still patched the "non-linkable data points". In my report, I suggested a remediation that involved removing last names and locations from the API data, which would resolve any 'identifiable by linkage' personal data. Their patch did indeed remove these; after reviewing the updated version of the app, the API now returns the following:

Furthermore, despite their view that it's not an issue, when I discussed releasing these findings after a responsible disclosure period, they asked:

> "we’d rather you not publicize your findings because we don't believe this is a PII leak and this kind of thing can be blown out of proportion and taken out of context by others."

Despite this comment, they stated:

> "Sometimes, as you have seen, we don’t eliminate every risk, but we try to be on the side of the user. If you do decide that you need to make a posting, we kindly ask as a courtesy that you show us the posting in advance so that we can give you our comments on the specific disclosures you make so they are as technically accurate as possible."

Since I am not a privacy expert, you can make up your own mind about whether this vulnerability was a privacy risk. In my opinion, having that set of data exposed for all 25+ million users definitely presents a privacy issue. Let me know what you think in the comments.

So, in review of Glow

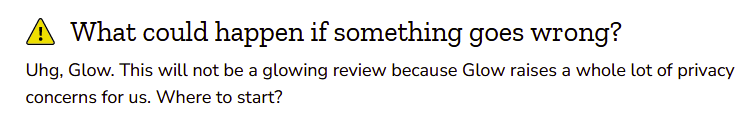

The Mozilla Foundation's review of this product can be summarized by this screenshot of their article:

They gave it a poor rating and advised people to reconsider using this product. I think this research further validates their findings: not only does the privacy policy ring alarm bells, but this research also shows that the security of the product may not be up to standard either. Given my interactions with the company, which swung between acceptance and denial, my impression is that they hold to a truly capitalistic data-as-commodity model, and the company doesn't seem to be acting in the best interest of its users. After all, they are selling people's data for profit. Users' data is generating them money, and one day someone will buy the company and own all that data. As their founder states, it's a "data-as-commodity" business, just like the rest of Big Tech. If you are considering using this application for your reproductive health data, I would consider other options that do not commoditize or store your data if I were you.

In the bigger picture, Glow's business as a whole inherently presents huge vulnerabilities to the safety of people's information, due to the data-as-commodity tech model. As Aral Balkan eloquently put it:

> The real vulnerability is that Glow, the company, and all its data, are available for sale to anyone who has the money to afford to buy it.

Supporting the RE:privacy project

Thank you for reading this report. If you wish to find out more about the RE:Privacy project, please see this post here. I am a security researcher who works entirely in the non-profit sector, with no corporate affiliation or employer. If you think this work was beneficial to the privacy and safety of the internet, please consider subscribing!

Thanks,

Ovi
